Enterprise Generative AI Market Remains Largely Untapped

NEWS

Although generative Artificial Intelligence (AI) continues to develop rapidly, most solutions remain focused on the consumer market. The enterprise market remains largely nascent, with limited deployments that address only a handful of low-hanging use cases. This is mainly because enterprises have limited internal Machine Learning Operations (MLOps) skills and because many Independent Software Vendors (ISVs) have not yet caught up with the market since OpenAI’s release of ChatGPT in 4Q 2022.

Given the high costs associated with AI Research and Development (R&D), hosting, and operations, vendors across the value chain must start monetizing the enterprise segment. Historically, market growth has been stimulated by software development accessibility. For example, visual coding tools such as Visual C++ and Visual Basic triggered the adoption of Personal Computer (PC) devices and applications in the enterprise sector; JavaScript, HTML, and CSS boosted the development and usage of web-based applications; and Apple’s Software Development Kit (SDK) and Android’s programming languages unlocked smartphone growth. ABI Research expects the enterprise AI market to follow a similar pattern. Vendors must remove barriers to development and provide greater incentives to trigger enterprise AI growth.

Some recent market activity has reflected this. OpenAI released GPTs, which enable customers to create custom versions of ChatGPT tailored for specific topics. This platform utilizes natural language prompts and requires zero coding. It also offers incentives by providing monetization opportunities and supports enterprise customers by allowing internal-only GPT creation. Others have also been active in this area. NVIDIA’s newly released enterprise foundry service provides the models, tools, and platform necessary for enterprise developer experimentation and application deployments. AWS has created PartyRock, a platform that enables developers to experiment with foundation models and low/no-code tools. Intel has built the AI PC Acceleration Program, which brings AI toolchains, co-engineering, hardware access, technical expertise, and co-marketing opportunities to ISVs.

Vendors Must Lower Barriers to Development and Engage More Deeply to Drive Enterprise AI Monetization

IMPACT

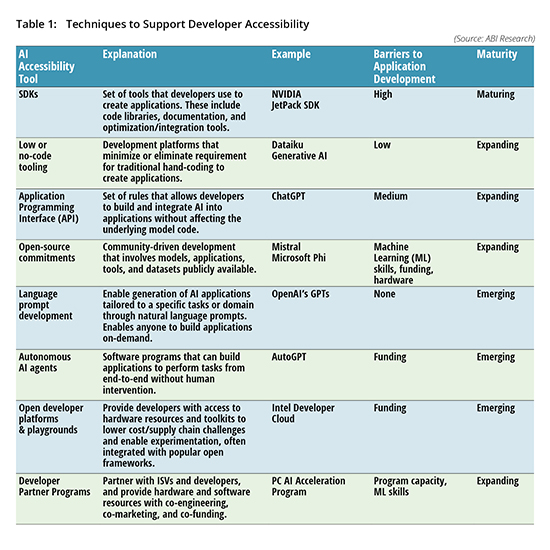

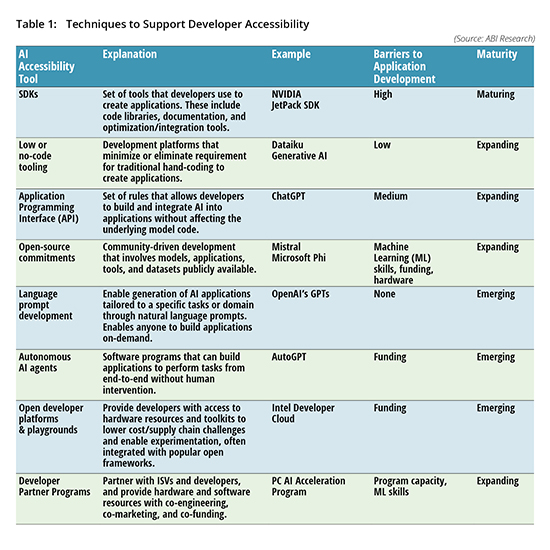

These platforms and programs are certainly a step in the right direction, but more still needs to be done across the entire value chain (chip vendors, model developers, hyperscalers, data platforms). Table 1 provides a breakdown of the key techniques that stakeholders should employ to increase developer accessibility and drive enterprise AI deployment.

Open Ecosystem Support Offers Direct and Indirect Enterprise Monetization Opportunities

RECOMMENDATIONS

Investing in open models, tools, platforms, and libraries will be one of the most effective ways to trigger the development of enterprise-ready applications, but some ask the following question: “Why should we invest in the open ecosystem if there is no clear link with product monetization?” On the surface, this seems like a fair objection given the cost of AI R&D, but digging deeper reveals direct and indirect links between open ecosystem support and monetization, some of which are explored below:

Direct:

- Open Core: Offering a feature-limited product for free, while customers pay for commercial versions. This can also be delivered in a Software-as-a-Service (SaaS) model through which commercial entities pay per service used beyond the core open products.

- Customer Monetization Targets: Choosing a select group of customers that have to pay for access, normally those deploying the product commercially. For example, hyperscalers have to pay to provide access to Meta’s Llama 2 through their AI platforms.

Indirect:

- Showcase AI Leadership: Supporting market perception of company R&D by contributing to and influencing open-source development. This can help drive customer engagement in other areas that have direct monetization strategies. It can also help attract investment funding and commercial partnerships that can support monetization strategies.

- Product Development and Differentiation: Utilizing open innovation to build Intellectual Property (IP) and support wider product strategy. Open-source support can also accelerate application development by lowering barriers; for example, Meta’s Llama models are used in Quest 3, and Intel’s OpenAPI supports developers in building applications for PC AI.

- Internal Tools: Developing in open source to benefit from low-cost community innovation, and then deploying models, tools, and applications internally. For example, Meta uses Llama, which has benefited from community innovation, for internal use cases.

- Competitor Differentiation: Developing a strong open application ecosystem around products/services to mitigate competitive pressure. Chip vendors will use this strategy to differentiate their proposition from NVIDIA by contrasting an open proposition with CUDA’s largely closed approach.

- Building Captive Audience and Customer Share: Enabling access to resources (models, chips) can help build brand stickiness and create upselling opportunities in the future.

As we move into 2024, developer accessibility, choice, and freedom will be hot topics as vendors look to stimulate enterprise AI deployments. Building a strong open ecosystem strategy will be valuable, but challenging. Some key areas that vendors must address include the following:

- Link the open ecosystem R&D investment budget to a monetization model to convince internal stakeholders of the potential value that strategic contributions and investments could create.

- Define an internal open-source strategy to ensure that all contributions and investments are aligned closely with monetization targets. Retaining tight control over contributions is necessary.

- Build strong brand awareness around open source, given its positive market perception.

- Work across the ecosystem to build a comprehensive toolkit for developers with coding libraries, models, and tools.

- Extend developer support beyond open-source contributions to target other developer barriers through funding, hardware resource access, and expert knowledge. This will help create a strong ecosystem of startups and developers building enterprise-ready applications.
